DP-203: Data Engineering on Microsoft Azure

Which Azure Data Factory component contains the transformation logic or the analysis commands for the work that Azure Data Factory performs?

Linked Services

Datasets

Activities

Pipelines

Answer is Activities

Activities contain the transformation logic or the analysis commands for the work that Azure Data Factory performs. Linked Services are objects that define the connection to data stores or compute resources. Datasets represent data structures within the data stores referenced by Linked Service objects. Pipelines are logical groupings of activities.

A pipeline JSON definition is embedded into an Activity JSON definition. True or False?

True

False

Answer is False

An Activity JSON definition is embedded into a pipeline JSON definition, inside the pipeline's activities array.
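To make the nesting concrete, the following minimal Python sketch builds a pipeline definition as a dictionary and serializes it to JSON. The activity sits inside the pipeline's `activities` array and points at datasets by reference, and those datasets in turn reference linked services. The names `CopyPipeline`, `CopyBlobToSql`, `InputBlobDataset` and `OutputSqlDataset` are hypothetical, and the property set is trimmed down; it is an illustration of the structure, not a complete, deployable definition.

```python
import json

# Hypothetical names; only the nesting structure is the point of this sketch.
copy_activity = {
    "name": "CopyBlobToSql",
    "type": "Copy",
    # Activities reference datasets by name; datasets in turn reference linked services.
    "inputs": [{"referenceName": "InputBlobDataset", "type": "DatasetReference"}],
    "outputs": [{"referenceName": "OutputSqlDataset", "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "BlobSource"},
        "sink": {"type": "SqlSink"},
    },
}

pipeline = {
    "name": "CopyPipeline",
    "properties": {
        # The activity JSON is embedded inside the pipeline JSON, not the other way round.
        "activities": [copy_activity],
    },
}

pipeline_json = json.dumps(pipeline, indent=2)
print(pipeline_json)
```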

Which transformation in Mapping Data Flow is used to route data rows to different streams based on matching conditions?

Lookup

Conditional Split

Select

Answer is Conditional Split

A Conditional Split transformation routes data rows to different streams based on matching conditions. The Conditional Split transformation is similar to a CASE decision structure in a programming language. A Lookup transformation is used to add reference data from another source to your Data Flow. The Lookup transformation requires a defined source that points to your reference table and matches on key fields. A Select transformation is used to select and/or define your columns.
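The CASE-like routing can be sketched in plain Python. This is only a model of the idea, not Data Factory's expression language: each named condition is a predicate, rows that match no condition go to a default stream, and the hypothetical `first_match` flag mirrors the choice between stopping at the first matching condition and sending a row to every stream whose condition it meets.

```python
from typing import Callable

Predicate = Callable[[dict], bool]


def conditional_split(
    rows: list[dict],
    conditions: list[tuple[str, Predicate]],
    default: str = "default",
    first_match: bool = True,
) -> dict[str, list[dict]]:
    """Route each row to the stream(s) whose condition it satisfies (illustrative model)."""
    streams: dict[str, list[dict]] = {name: [] for name, _ in conditions}
    streams[default] = []
    for row in rows:
        matched = False
        for name, predicate in conditions:
            if predicate(row):
                streams[name].append(row)
                matched = True
                if first_match:
                    break  # like a CASE statement: stop at the first true branch
        if not matched:
            streams[default].append(row)  # rows matching no condition
    return streams


# Hypothetical sample rows.
orders = [
    {"id": 1, "region": "UK"},
    {"id": 2, "region": "US"},
    {"id": 3, "region": "FR"},
]
result = conditional_split(
    orders,
    [("uk", lambda r: r["region"] == "UK"), ("us", lambda r: r["region"] == "US")],
)
```

Here the UK row lands in `uk`, the US row in `us`, and the FR row, which matches neither condition, in `default`.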

Which transformation is used to load data into a data store or compute resource?

Window

Source

Sink

Answer is Sink

A Sink transformation allows you to choose a dataset definition for the destination output data. You can have as many sink transformations as your data flow requires.

A Window transformation is where you will define window-based aggregations of columns in your data streams.

A Source transformation configures your data source for the data flow. When designing data flows, your first step will always be configuring a source transformation.
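What a window-based aggregation computes can be illustrated outside Data Factory. The sketch below adds a running total per partition in plain Python, roughly what a window with a partition key, a sort order and a cumulative sum would produce. The column names `store`, `day` and `amount` are hypothetical, and this is a model of the concept rather than Data Factory's own syntax.

```python
from itertools import groupby
from operator import itemgetter


def running_total(rows, partition_key, order_key, value_key, out_key="running_total"):
    """Add a cumulative sum of value_key within each partition, ordered by order_key."""
    ordered = sorted(rows, key=itemgetter(partition_key, order_key))
    result = []
    for _, group in groupby(ordered, key=itemgetter(partition_key)):
        total = 0
        for row in group:
            total += row[value_key]
            # Every input row is kept; the window result is added as a new column.
            result.append({**row, out_key: total})
    return result


# Hypothetical sample data.
sales = [
    {"store": "A", "day": 2, "amount": 5},
    {"store": "B", "day": 1, "amount": 7},
    {"store": "A", "day": 1, "amount": 10},
    {"store": "B", "day": 2, "amount": 3},
]
windowed = running_total(sales, "store", "day", "amount")
```

Unlike a group-by aggregation, which collapses each partition to one row, every input row survives and carries its window value.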

What is the DataFrame method call to create a temporary view?

createOrReplaceTempView()

ViewTemp()

createTempViewDF()

Answer is createOrReplaceTempView()

createOrReplaceTempView() is the correct method for creating a temporary view from a DataFrame; ViewTemp() and createTempViewDF() are not valid method names.

You have an Azure Storage account and an Azure SQL data warehouse in the UK South region.

You need to copy blob data from the storage account to the data warehouse by using Azure Data Factory.

The solution must meet the following requirements:

- Ensure that the data remains in the UK South region at all times.

- Minimize administrative effort.

Which type of integration runtime should you use?

Azure integration runtime

Self-hosted integration runtime

Azure-SSIS integration runtime

Answer is Azure integration runtime

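A self-hosted integration runtime is meant for data stores in on-premises or other private networks. Here both the storage account and the data warehouse are Azure services, so a fully managed Azure integration runtime whose location is set to UK South keeps the copy in that region with the least administrative effort.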
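An Azure integration runtime can be given an explicit region instead of the default auto-resolve location. The Python sketch below assembles such a definition as a dictionary. The IR name `UkSouthAzureIR` is hypothetical, and the property layout (`type: Managed`, `computeProperties.location`) is assumed from the shape used in the Azure Data Factory integration runtime documentation; verify it against the current schema before relying on it.

```python
import json


def azure_ir_definition(name: str, location: str = "AutoResolve") -> dict:
    """Build a minimal Azure (managed) integration runtime definition.

    Passing an explicit region such as "UK South" instead of "AutoResolve"
    pins where the IR's compute runs. Layout assumed from the ADF docs.
    """
    return {
        "name": name,  # hypothetical IR name supplied by the caller
        "properties": {
            "type": "Managed",  # Azure IR; a self-hosted IR uses "SelfHosted"
            "typeProperties": {
                "computeProperties": {"location": location},
            },
        },
    }


ir = azure_ir_definition("UkSouthAzureIR", location="UK South")
print(json.dumps(ir, indent=2))
```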

References:

https://docs.microsoft.com/en-us/azure/data-factory/concepts-integration-runtime

You develop data engineering solutions for a company.

You must integrate the company's on-premises Microsoft SQL Server data with Microsoft Azure SQL Database. Data must be transformed incrementally.

You need to implement the data integration solution.

Which tool should you use to configure a pipeline to copy data?

Use the Copy Data tool with Blob storage linked service as the source

Use Azure PowerShell with SQL Server linked service as a source

Use Azure Data Factory UI with Blob storage linked service as a source

Use the .NET Data Factory API with Blob storage linked service as the source

Answer is "Use Azure Data Factory UI with Blob storage linked service as a source"

The self-hosted Integration Runtime is a customer-managed data integration infrastructure used by Azure Data Factory to provide data integration capabilities across different network environments. It is what lets Data Factory reach the on-premises SQL Server in this scenario.

A linked service defines the information needed for Azure Data Factory to connect to a data resource. We have three resources in this scenario for which linked services are needed:

- On-premises SQL Server

- Azure Blob Storage

- Azure SQL database

Note: Azure Data Factory is a fully managed, cloud-based data integration service that orchestrates and automates the movement and transformation of data. The key concept in the ADF model is the pipeline. A pipeline is a logical grouping of Activities, each of which defines the actions to perform on the data contained in Datasets. Linked services define the information Data Factory needs to connect to the data resources.
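As a minimal sketch of the three linked services this scenario needs, the Python below builds their definitions as dictionaries. Only the on-premises SQL Server routes through a self-hosted IR via `connectVia`; the two Azure services fall back to the default Azure IR. All names and connection strings are hypothetical placeholders (in practice secrets would normally come from Azure Key Vault), and the property set is trimmed to the essentials.

```python
def linked_service(name, ls_type, connection_string, ir_name=None):
    """Return a minimal linked service definition (hypothetical placeholder values)."""
    properties = {
        "type": ls_type,
        "typeProperties": {"connectionString": connection_string},
    }
    if ir_name is not None:
        # Route connections through a named integration runtime, e.g. a self-hosted IR.
        properties["connectVia"] = {
            "referenceName": ir_name,
            "type": "IntegrationRuntimeReference",
        }
    return {"name": name, "properties": properties}


# Hypothetical names and placeholder connection strings.
linked_services = [
    linked_service("OnPremSqlServerLS", "SqlServer", "<on-prem connection string>",
                   ir_name="MySelfHostedIR"),
    linked_service("BlobStorageLS", "AzureBlobStorage", "<storage connection string>"),
    linked_service("AzureSqlDatabaseLS", "AzureSqlDatabase", "<azure sql connection string>"),
]
```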

References:

https://docs.microsoft.com/en-us/azure/machine-learning/team-data-science-process/move-sql-azure-adf

You have an Azure virtual machine that has Microsoft SQL Server installed. The server contains a table named Table1.

You need to copy the data from Table1 to an Azure Data Lake Storage Gen2 account by using an Azure Data Factory V2 copy activity.

Which type of integration runtime should you use?

Azure integration runtime

self-hosted integration runtime

Azure-SSIS integration runtime

Answer is self-hosted integration runtime

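Copying between a cloud data source and a data source in a private network: if either the source or the sink linked service points to a self-hosted IR, the copy activity runs on that self-hosted integration runtime. A SQL Server instance on an Azure virtual machine is reached through the VM's virtual network, so it is treated as a private-network data store that needs a self-hosted IR.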
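The selection rule can be written as a small decision function. This is a simplified sketch, assuming each linked service is identified by the name of the IR it connects through and that the caller knows which IR names are self-hosted. The IR name `VmSqlSelfHostedIR` is hypothetical; `AutoResolveIntegrationRuntime` is the default Azure IR name. For the cloud-to-cloud case the sketch simply reports the sink's IR, which stands in for Data Factory's own resolution logic.

```python
def copy_activity_runtime(source_ir: str, sink_ir: str, self_hosted_irs: set[str]) -> str:
    """Return the name of the IR that runs a copy activity (simplified model).

    source_ir / sink_ir: IRs the source and sink linked services connect through.
    self_hosted_irs: names of self-hosted IRs; any other IR is treated as an Azure IR.
    """
    source_private = source_ir in self_hosted_irs
    sink_private = sink_ir in self_hosted_irs
    if source_private and sink_private:
        # Two private-network stores: both must point to the same self-hosted IR.
        if source_ir != sink_ir:
            raise ValueError("source and sink must use the same self-hosted IR")
        return source_ir
    if source_private:
        return source_ir  # private -> cloud copy runs on the self-hosted IR
    if sink_private:
        return sink_ir  # cloud -> private copy runs on the self-hosted IR
    # Cloud-to-cloud: an Azure IR runs the copy; this sketch just reports the sink's IR.
    return sink_ir


# Table1 scenario: SQL Server on a VM (self-hosted IR) -> Data Lake Storage Gen2 (Azure IR).
runtime = copy_activity_runtime(
    "VmSqlSelfHostedIR", "AutoResolveIntegrationRuntime", {"VmSqlSelfHostedIR"}
)
```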

References:

https://docs.microsoft.com/en-us/azure/data-factory/concepts-integration-runtime#determining-which-ir-to-use

You have a new Azure Data Factory environment.

You need to periodically analyze pipeline executions from the last 60 days to identify trends in execution durations. The solution must use Azure Log Analytics to query the data and create charts.

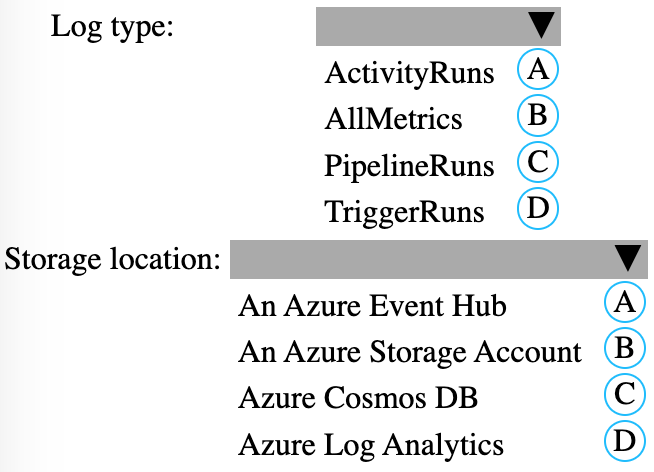

Which diagnostic settings should you configure in Data Factory?

A-B

A-C

B-C

B-D

C-A

C-B

D-A

D-C

Answer is C - B

Log type: PipelineRuns

A pipeline run in Azure Data Factory defines an instance of a pipeline execution.

Storage location: An Azure Storage account

Data Factory stores pipeline-run data for only 45 days, which is shorter than the 60-day window in this question. Use Azure Monitor if you want to keep that data longer. With Azure Monitor, you can route diagnostic logs for analysis, and you can also keep them in a storage account so that you have factory information for your chosen duration.

Save your diagnostic logs to a storage account for auditing or manual inspection. You can use the diagnostic settings to specify the retention time in days.
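For illustration, here is a Python sketch of the body of an Azure Monitor diagnostic setting that sends the PipelineRuns log category to a storage account with a 60-day retention. The subscription, resource group and account segments of the resource ID are hypothetical placeholders, and the `retentionPolicy` field reflects the diagnostic-settings schema this question assumes; check the current Azure Monitor documentation, because per-setting storage retention is being retired in favour of storage lifecycle management policies.

```python
ANALYSIS_WINDOW_DAYS = 60
ADF_BUILT_IN_RETENTION_DAYS = 45  # pipeline-run retention stated above


def diagnostic_setting(storage_account_id: str, retention_days: int) -> dict:
    """Minimal diagnostic-setting body routing PipelineRuns logs to a storage account."""
    return {
        "properties": {
            "storageAccountId": storage_account_id,
            "logs": [
                {
                    "category": "PipelineRuns",
                    "enabled": True,
                    # Schema assumption: may be superseded by storage lifecycle rules.
                    "retentionPolicy": {"enabled": True, "days": retention_days},
                }
            ],
        }
    }


# Built-in retention is shorter than the analysis window, so logs must be exported.
needs_export = ADF_BUILT_IN_RETENTION_DAYS < ANALYSIS_WINDOW_DAYS

# Hypothetical resource ID with placeholder segments.
setting = diagnostic_setting(
    "/subscriptions/<sub-id>/resourceGroups/<rg>/providers/"
    "Microsoft.Storage/storageAccounts/<account>",
    retention_days=ANALYSIS_WINDOW_DAYS,
)
```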

References:

https://docs.microsoft.com/en-us/azure/data-factory/concepts-pipeline-execution-triggers

https://docs.microsoft.com/en-us/azure/data-factory/monitor-using-azure-monitor

You have an activity in an Azure Data Factory pipeline. The activity calls a stored procedure in a data warehouse in Azure Synapse Analytics and runs daily.

You need to verify the duration of the activity when it ran last.

What should you use?

the sys.dm_pdw_wait_stats dynamic management view in Azure Synapse Analytics

an Azure Resource Manager template

activity runs in Azure Monitor

Activity log in Azure Synapse Analytics

Answer is activity runs in Azure Monitor

Monitor activity runs: to get a detailed view of the individual activity runs of a specific pipeline run, select the pipeline name.

The list view shows the activity runs that correspond to each pipeline run. Hover over a specific activity run to get run-specific information such as the JSON input, the JSON output, and detailed activity-specific monitoring experiences. This view also shows the Duration of each activity run.
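If you retrieve activity runs programmatically instead of through the portal, each run record carries its own timing. The sketch below picks the most recent run of a named activity from a list of run records and returns its duration. It assumes records shaped like Data Factory activity-run objects with `activityName`, `activityRunStart` and `durationInMs` fields; the sample values and the activity name `RunDailyProc` are made up, and the timestamps omit the trailing `Z` so that `datetime.fromisoformat` parses them on any Python 3 version.

```python
from datetime import datetime, timedelta


def last_run_duration(runs: list[dict], activity_name: str) -> timedelta:
    """Return the duration of the most recent run of activity_name."""
    matching = [r for r in runs if r["activityName"] == activity_name]
    if not matching:
        raise LookupError(f"no runs found for {activity_name!r}")
    # Most recent run = latest start time.
    latest = max(matching, key=lambda r: datetime.fromisoformat(r["activityRunStart"]))
    return timedelta(milliseconds=latest["durationInMs"])


# Hypothetical activity-run records.
sample_runs = [
    {"activityName": "RunDailyProc", "activityRunStart": "2024-05-01T02:00:00", "durationInMs": 95_000},
    {"activityName": "RunDailyProc", "activityRunStart": "2024-05-02T02:00:00", "durationInMs": 120_000},
    {"activityName": "OtherActivity", "activityRunStart": "2024-05-03T02:00:00", "durationInMs": 5_000},
]
print(last_run_duration(sample_runs, "RunDailyProc"))  # 0:02:00
```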

Incorrect Answers:

sys.dm_pdw_wait_stats holds information about the SQL Server OS state of the instances running on the different nodes; it does not record how long a Data Factory activity ran.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/monitor-visually