DP-203: Data Engineering on Microsoft Azure

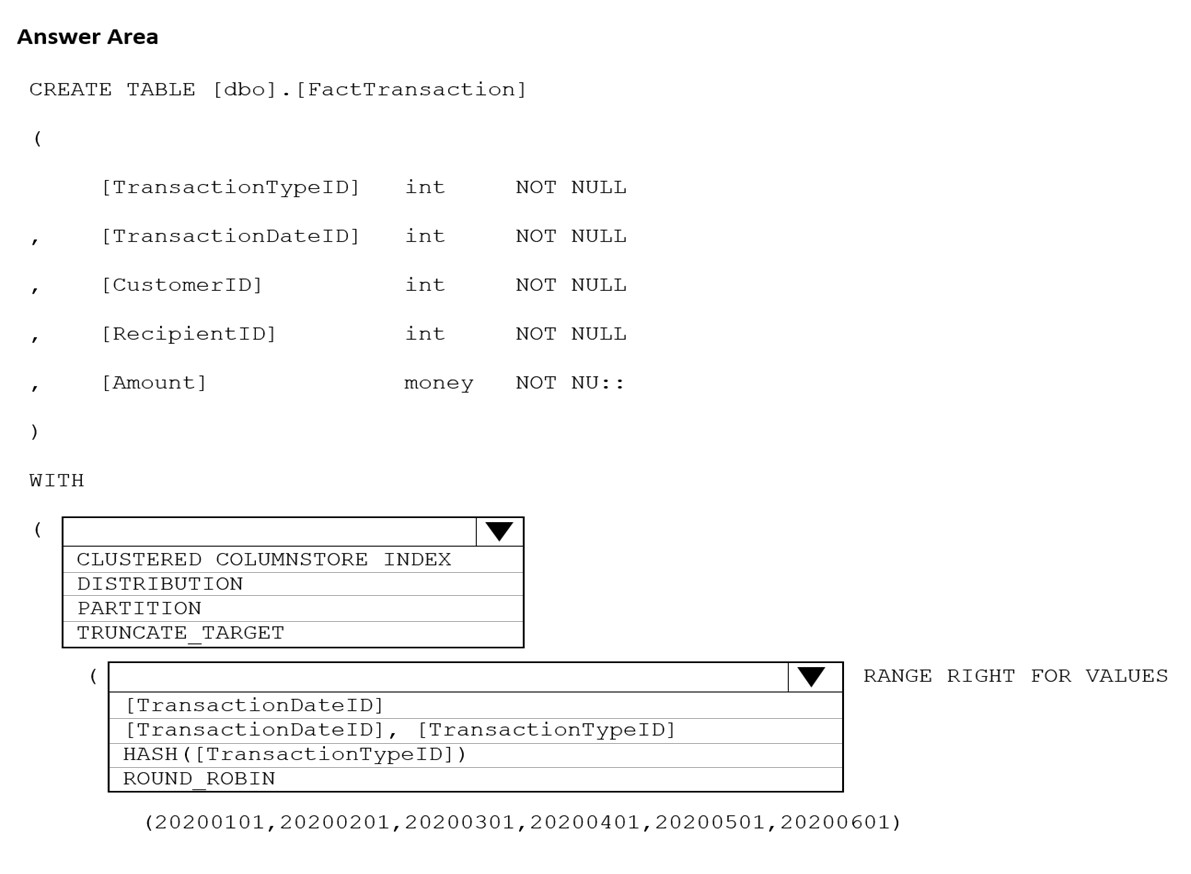

You are building an Azure Synapse Analytics dedicated SQL pool that will contain a fact table for transactions from the first half of the year 2020.

You need to ensure that the table meets the following requirements:

● Minimizes the processing time to delete data that is older than 10 years

● Minimizes the I/O for queries that use year-to-date values

How should you complete the Transact-SQL statement?

Answer is

Box 1: PARTITION

RANGE RIGHT FOR VALUES is used with PARTITION.

Box 2: [TransactionDateID]

Partition on the date column.

Example: Creating a RANGE RIGHT partition function on a datetime column

The following partition function partitions a table or index into 12 partitions, one for each month of a year's worth of values in a datetime column.

CREATE PARTITION FUNCTION [myDateRangePF1] (datetime)
AS RANGE RIGHT FOR VALUES ('20030201', '20030301', '20030401',
'20030501', '20030601', '20030701', '20030801',
'20030901', '20031001', '20031101', '20031201');
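The boundary arithmetic in the example above can be checked with a small Python sketch. It is only a simulation of the RANGE RIGHT rule, not T-SQL; the helper name `partition_number` is hypothetical, and the boundary list simply mirrors the eleven values in the partition function.

```python
from bisect import bisect_right
from datetime import date

# Boundary values mirroring the partition function above:
# the first day of February through December 2003 (11 values).
boundaries = [date(2003, month, 1) for month in range(2, 13)]

# N boundary values always produce N + 1 partitions.
partition_count = len(boundaries) + 1


def partition_number(value, boundaries):
    """Return the 1-based partition for value under RANGE RIGHT.

    Under RANGE RIGHT a boundary value belongs to the partition on its
    right, so a value equal to a boundary is counted as "passed".
    """
    return bisect_right(boundaries, value) + 1


if __name__ == "__main__":
    print(partition_count)
    print(partition_number(date(2003, 2, 1), boundaries))
```

Eleven boundaries give twelve partitions, and a row dated exactly on a boundary (for example 1 February) lands in the partition that starts on that date.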

Reference:

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-partition-function-transact-sql

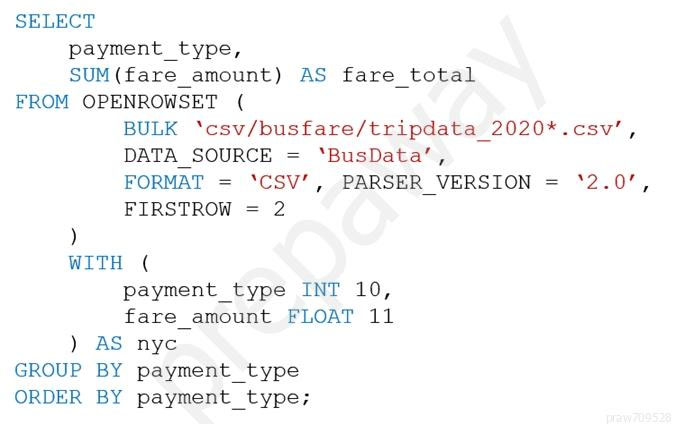

You are performing exploratory analysis of the bus fare data in an Azure Data Lake Storage Gen2 account by using an Azure Synapse Analytics serverless SQL pool.

You execute the Transact-SQL query shown in the following exhibit.

What do the query results include?

Only CSV files in the tripdata_2020 subfolder.

All files that have file names that begin with

All CSV files that have file names that contain

Only CSV files that have file names that begin with

Answer is Only CSV files that have file names that begin with
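The exhibit is not reproduced here, so the sketch below assumes a hypothetical wildcard pattern, `tripdata_2020*.csv`, and hypothetical file names. It uses Python's `fnmatchcase` as a rough stand-in for wildcard matching in a file path; it is not the serverless SQL pool's own matcher.

```python
from fnmatch import fnmatchcase

# Hypothetical pattern: the real exhibit is an image and is not shown.
PATTERN = "tripdata_2020*.csv"

# Hypothetical file names, chosen to exercise each answer option.
FILES = [
    "tripdata_2020-01.csv",         # begins with the prefix, is CSV
    "tripdata_2020-02.parquet",     # begins with the prefix, not CSV
    "tripdata_2019-12.csv",         # CSV, different prefix
    "yellow_tripdata_2020-01.csv",  # contains the text, does not begin with it
]


def matching_files(names, pattern=PATTERN):
    """Return the names that match the whole wildcard pattern."""
    return [name for name in names if fnmatchcase(name, pattern)]


if __name__ == "__main__":
    print(matching_files(FILES))
```

Under these assumptions only the first file matches: the fixed prefix rules out names that merely contain the text, and the `.csv` suffix rules out other file types.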

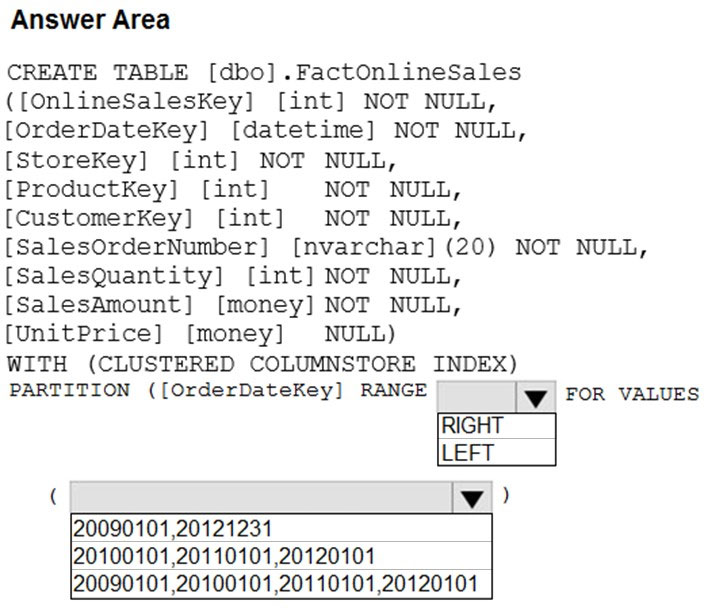

You have an enterprise data warehouse in Azure Synapse Analytics that contains a table named FactOnlineSales. The table contains data from the start of 2009 to the end of 2012.

You need to improve the performance of queries against FactOnlineSales by using table partitions. The solution must meet the following requirements:

● Create four partitions based on the order date.

● Ensure that each partition contains all the orders placed during a given calendar year.

How should you complete the T-SQL command?

RANGE LEFT and RANGE RIGHT create similar partitions, but they differ in which partition each boundary value belongs to.

For example, in this scenario, with RANGE LEFT and boundary values 20100101, 20110101, 20120101:

The partitions will be: datecol <= 20100101; datecol > 20100101 and datecol <= 20110101; datecol > 20110101 and datecol <= 20120101; datecol > 20120101

With RANGE RIGHT and the same boundary values 20100101, 20110101, 20120101:

The partitions will be: datecol < 20100101; datecol >= 20100101 and datecol < 20110101; datecol >= 20110101 and datecol < 20120101; datecol >= 20120101

In this example, RANGE RIGHT is the suitable choice, because each partition then holds exactly one calendar year, from January 1 to December 31.
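The difference between the two options can be simulated in a few lines of Python. This is a sketch of the boundary rule only, with hypothetical function names, using the integer date keys from the explanation above.

```python
from bisect import bisect_left, bisect_right

# Boundary values from the explanation above, as integer date keys.
BOUNDARIES = [20100101, 20110101, 20120101]


def partition_range_left(value, boundaries=BOUNDARIES):
    """RANGE LEFT: a boundary value stays in the partition on its left."""
    return bisect_left(boundaries, value) + 1


def partition_range_right(value, boundaries=BOUNDARIES):
    """RANGE RIGHT: a boundary value moves to the partition on its right."""
    return bisect_right(boundaries, value) + 1


if __name__ == "__main__":
    for key in (20091231, 20100101, 20100102):
        print(key, partition_range_left(key), partition_range_right(key))
```

Only the row dated exactly 1 January 2010 is placed differently: RANGE LEFT keeps it with the 2009 rows in partition 1, while RANGE RIGHT puts it with the rest of 2010 in partition 2.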

Reference:

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-partition-function-transact-sql?view=sql-server-ver15

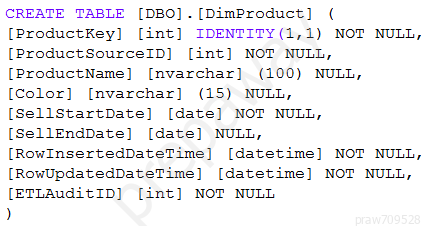

You need to implement a Type 3 slowly changing dimension (SCD) for product category data in an Azure Synapse Analytics dedicated SQL pool.

You have a table that was created by using the following Transact-SQL statement.

Which two columns should you add to the table? Each correct answer presents part of the solution.

[EffectiveStartDate] [datetime] NOT NULL,

[CurrentProductCategory] [nvarchar] (100) NOT NULL,

[EffectiveEndDate] [datetime] NULL,

[ProductCategory] [nvarchar] (100) NOT NULL,

[OriginalProductCategory] [nvarchar] (100) NOT NULL,

Answers are [CurrentProductCategory] [nvarchar] (100) NOT NULL, and [OriginalProductCategory] [nvarchar] (100) NOT NULL,

A Type 3 SCD supports storing two versions of a dimension member as separate columns. The table includes a column for the current value of a member plus either the original or previous value of the member. So Type 3 uses additional columns to track one key instance of history, rather than storing additional rows to track each change like in a Type 2 SCD.

This type of tracking may be used for one or two columns in a dimension table. It is not common to use it for many members of the same table. It is often used in combination with Type 1 or Type 2 members.
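As a rough illustration of the Type 3 pattern with the two columns from the answer, the Python sketch below models a dimension row as a dictionary. The function name, the `ProductKey` field and the category values are hypothetical; in a real pool this would be an UPDATE statement against the table.

```python
def apply_type3_change(row, new_category):
    """Return a copy of the row with the current value overwritten.

    Type 3 keeps history in columns rather than rows: the original value
    is never touched, and only the current value changes.
    """
    updated = dict(row)
    updated["CurrentProductCategory"] = new_category
    return updated


# Hypothetical dimension member as first loaded.
row = {
    "ProductKey": 1,
    "OriginalProductCategory": "Bikes",
    "CurrentProductCategory": "Bikes",
}

after_first = apply_type3_change(row, "Cycling")
after_second = apply_type3_change(after_first, "Outdoor")

if __name__ == "__main__":
    print(after_second)
```

After two changes the row still holds a single record: the original category and the latest one. The intermediate value ("Cycling") is lost, which is the trade-off against a Type 2 SCD.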

Reference:

https://k21academy.com/microsoft-azure/azure-data-engineer-dp203-q-a-day-2-live-session-review/

You are designing an Azure Stream Analytics solution that will analyze Twitter data.

You need to count the tweets in each 10-second window. The solution must ensure that each tweet is counted only once.

Solution: You use a hopping window that uses a hop size of 10 seconds and a window size of 10 seconds.

Does this meet the goal?

Yes

No

Answer is Yes

A hopping window whose hop size equals its window size is equivalent to a tumbling window, so this solution counts each tweet exactly once. Tumbling windows are a series of fixed-sized, non-overlapping and contiguous time intervals. Unlike tumbling windows, hopping windows model scheduled overlapping windows, so an event can fall into more than one window when the hop size is smaller than the window size. A hopping window specification consists of three parameters: the timeunit, the windowsize (how long each window lasts) and the hopsize (by how much each window moves forward relative to the previous one). Additionally, offsetsize may be used as an optional fourth parameter.
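The relationship between hop size and window size can be checked with a small Python simulation. It is a sketch, not the Stream Analytics engine: it assumes integer timestamps in seconds, window ends aligned to multiples of the hop size, and windows that exclude their start and include their end. The function name is hypothetical.

```python
def hopping_windows(timestamp, size, hop):
    """Return the (start, end] windows that contain the given timestamp.

    Assumes window ends fall on multiples of hop, and that each window
    covers start < t <= end.
    """
    # Smallest multiple of hop that is >= timestamp (integer ceiling).
    end = -(-timestamp // hop) * hop
    windows = []
    while end - size < timestamp:
        windows.append((end - size, end))
        end += hop
    return windows


if __name__ == "__main__":
    print(hopping_windows(7, size=10, hop=10))  # hop equals size
    print(hopping_windows(7, size=10, hop=5))   # hop smaller than size
```

With a hop of 10 seconds and a size of 10 seconds, every timestamp falls into exactly one window, which is tumbling-window behavior. With a hop of 5 seconds the same event appears in two windows and would be counted twice.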

Reference:

https://docs.microsoft.com/en-us/stream-analytics-query/tumbling-window-azure-stream-analytics

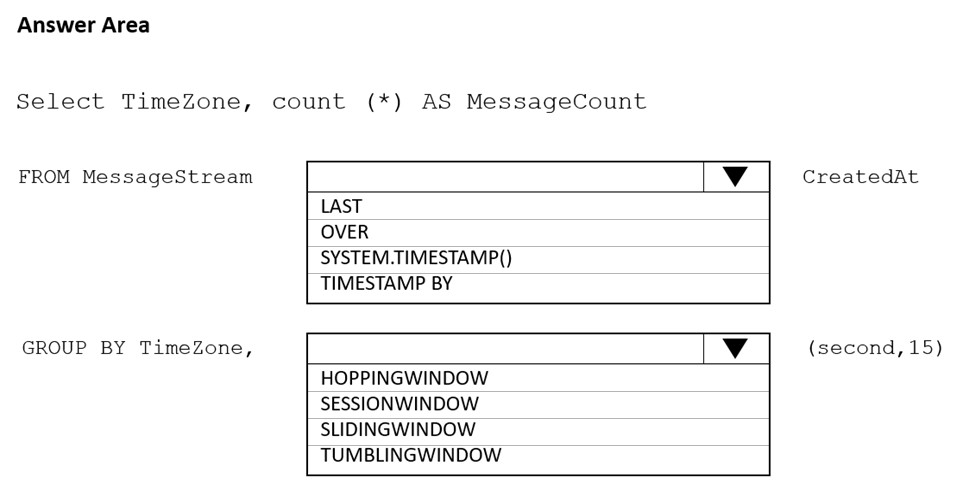

You are designing an Azure Stream Analytics solution that receives instant messaging data from an Azure Event Hub.

You need to ensure that the output from the Stream Analytics job counts the number of messages per time zone every 15 seconds.

How should you complete the Stream Analytics query? To answer, select the appropriate options in the answer area.

Box 1: timestamp by

Box 2: Tumbling window

Tumbling window functions segment a data stream into distinct time segments and perform a function against each segment. The key differentiators of a tumbling window are that the windows repeat, do not overlap, and an event cannot belong to more than one tumbling window.
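The grouping the query performs (a count per time zone for each 15-second tumbling window) can be sketched in Python. The event tuples, time zone labels and function name are hypothetical, timestamps are integer seconds, and each window is assumed to cover start < t <= end.

```python
from collections import Counter


def count_per_timezone(events, window_seconds=15):
    """Count events per (window_end, time_zone) for tumbling windows.

    events is an iterable of (timestamp_seconds, time_zone) pairs.
    Each event is assigned to exactly one window.
    """
    counts = Counter()
    for timestamp, time_zone in events:
        # Smallest multiple of window_seconds that is >= timestamp.
        window_end = -(-timestamp // window_seconds) * window_seconds
        counts[(window_end, time_zone)] += 1
    return dict(counts)


# Hypothetical messages: (event time in seconds, sender time zone).
events = [(1, "UTC"), (14, "UTC"), (15, "UTC"), (16, "PST"), (16, "UTC")]

if __name__ == "__main__":
    print(count_per_timezone(events))
```

Because each event maps to a single window end, the per-window counts always add up to the total number of events.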

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-window-functions

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Table1.

You have files that are ingested and loaded into an Azure Data Lake Storage Gen2 container named container1.

You plan to insert data from the files in container1 into Table1 and transform the data. Each row of data in the files will produce one row in the serving layer of Table1.

You need to ensure that when the source data files are loaded to container1, the DateTime is stored as an additional column in Table1.

Solution: You use a dedicated SQL pool to create an external table that has an additional DateTime column.

Does this meet the goal?

Yes

No

Answer is No

Instead use the derived column transformation to generate new columns in your data flow or to modify existing fields.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/data-flow-derived-column

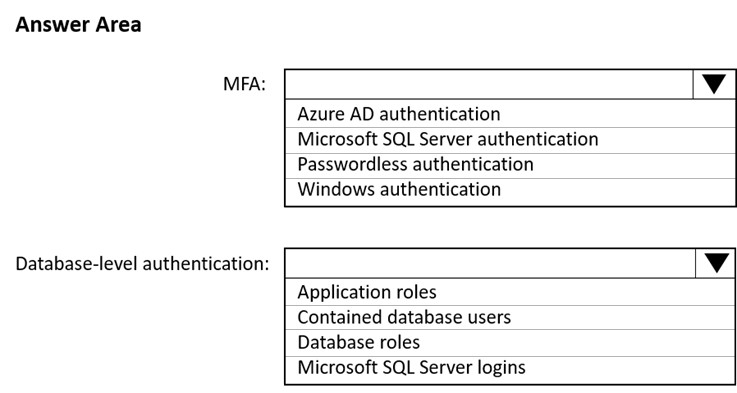

You have an Azure subscription that is linked to a hybrid Azure Active Directory (Azure AD) tenant. The subscription contains an Azure Synapse Analytics SQL pool named Pool1.

You need to recommend an authentication solution for Pool1. The solution must support multi-factor authentication (MFA) and database-level authentication.

Which authentication solution or solutions should you include in the recommendation?

Box 1: Azure AD authentication

Azure AD authentication has the option to include MFA.

Box 2: Contained database users

Azure AD authentication uses contained database users to authenticate identities at the database level.

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/authentication-mfa-ssms-overview

https://docs.microsoft.com/en-us/azure/azure-sql/database/authentication-aad-overview

You are designing an Azure Synapse Analytics dedicated SQL pool.

You need to ensure that you can audit access to Personally Identifiable Information (PII).

What should you include in the solution?

column-level security

dynamic data masking

row-level security (RLS)

sensitivity classifications

Answer is sensitivity classifications

Data Discovery & Classification is built into Azure SQL Database, Azure SQL Managed Instance, and Azure Synapse Analytics. It provides basic capabilities for discovering, classifying, labeling, and reporting the sensitive data in your databases.

Your most sensitive data might include business, financial, healthcare, or personal information. Discovering and classifying this data can play a pivotal role in your organization's information-protection approach. It can serve as infrastructure for:

● Helping to meet standards for data privacy and requirements for regulatory compliance.

● Various security scenarios, such as monitoring (auditing) access to sensitive data.

● Controlling access to and hardening the security of databases that contain highly sensitive data.

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/data-discovery-and-classification-overview

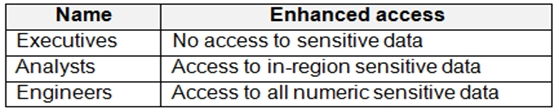

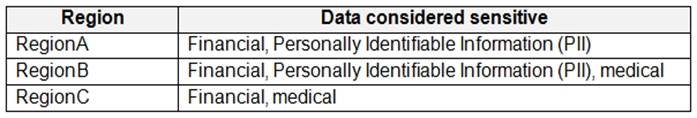

You are designing an Azure Synapse Analytics dedicated SQL pool.

Groups will have access to sensitive data in the pool as shown in the following table.

You have policies for the sensitive data. The policies vary by region as shown in the following table.

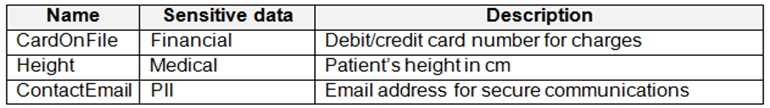

You have a table of patients for each region. The tables contain the following potentially sensitive columns.

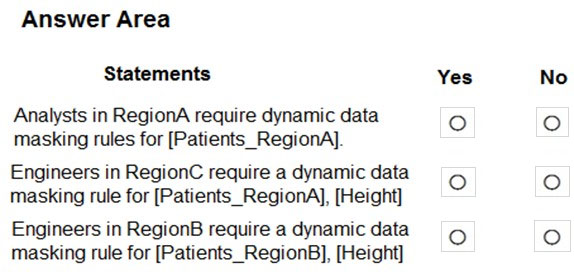

You are designing dynamic data masking to maintain compliance.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Answer is No - No - Yes

Statement 1: Analysts in Region A have access to all of the following sensitive data in Region A: CardOnFile, Height and ContactEmail. Only financial data (CardOnFile) and PII (ContactEmail) are considered sensitive in Region A, so Height does not need dynamic data masking there. The answer is No.

Statements 2 and 3: Engineers have access to all numeric sensitive data in every region, so they have access to Height. Height is medical data, which according to the second table is sensitive only in Region B, not in Region A. Statement 2 is therefore No and Statement 3 is Yes.

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/dynamic-data-masking-overview