DP-600: Implementing Analytics Solutions Using Microsoft Fabric

You have a Fabric tenant.

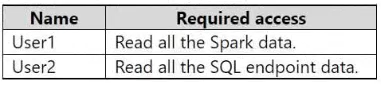

You need to configure OneLake security for users shown in the following table.

The solution must follow the principle of least privilege.

Which permission should you assign to each user?

Check the answer section

Answer is ReadAll - ReadData

ReadAll permission on the lakehouse lets the user read all the lakehouse data by using Apache Spark.

ReadData permission on the SQL endpoint lets the user read data through the endpoint without a SQL policy applied.

Reference:

https://learn.microsoft.com/en-us/fabric/data-engineering/lakehouse-sharing#sharing-and-permissions

You have a Fabric tenant that contains a semantic model. The model contains 15 tables.

You need to programmatically change each column that ends in the word Key to meet the following requirements:

- Hide the column.

- Set Nullable to False.

- Set Summarize By to None.

- Set Available in MDX to False.

- Mark the column as a key column.

What should you use?

Microsoft Power BI Desktop

ALM Toolkit

Tabular Editor

DAX Studio

Answer is Tabular Editor

Tabular Editor lets you automate these changes with C# scripts. In Power BI Desktop, you can make them only manually, one column at a time.

Here's why the other options are not ideal for this scenario:

1) Microsoft Power BI Desktop doesn't support bulk programmatic changes based on naming conventions.

2) ALM Toolkit is designed for comparing and deploying model schemas, not for scripting property changes inside a semantic model.

3) DAX Studio is designed for writing and analyzing DAX queries and can't modify model structure or properties in bulk.
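The bulk rule the question describes can be sketched in plain Python. This is only a toy model, not Tabular Editor's scripting API (which uses C#): the `Column` class and its field names are hypothetical stand-ins chosen to mirror the five properties listed in the question.

```python
from dataclasses import dataclass


@dataclass
class Column:
    """Hypothetical stand-in for a semantic model column (not a real API)."""
    name: str
    is_hidden: bool = False
    is_nullable: bool = True
    summarize_by: str = "Default"
    is_available_in_mdx: bool = True
    is_key: bool = False


def configure_key_columns(columns):
    """Apply the five required settings to every column whose name ends in 'Key'."""
    changed = []
    for col in columns:
        if col.name.endswith("Key"):
            col.is_hidden = True             # Hide the column
            col.is_nullable = False          # Nullable = False
            col.summarize_by = "None"        # Summarize By = None
            col.is_available_in_mdx = False  # Available in MDX = False
            col.is_key = True                # Mark as key column
            changed.append(col.name)
    return changed


# Illustrative column names only; they do not come from the question.
columns = [Column("CustomerKey"), Column("CustomerName"), Column("DateKey")]
changed = configure_key_columns(columns)
print(changed)
```

The point of the sketch is that one loop with a name filter replaces many manual edits, which is what a scripting tool provides and Power BI Desktop does not.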

You have an Azure Data Lake Storage Gen2 account named storage1 that contains a Parquet file named sales.parquet.

You have a Fabric tenant that contains a workspace named Workspace1.

Using a notebook in Workspace1, you need to load the content of the file to the default lakehouse. The solution must ensure that the content will display automatically as a table named Sales in Lakehouse explorer.

How should you complete the code?

Check the answer area

Answer is Delta - Sales
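A minimal sketch of what the completed notebook code might look like, assuming a PySpark notebook where a `spark` session is already available. The function name and the `source_path` parameter are hypothetical; the question does not give the full path to sales.parquet, so it is left as an argument.

```python
def load_parquet_as_table(spark, source_path, table_name="Sales"):
    """Read a Parquet file and save it as a Delta table in the default lakehouse.

    `spark` is the notebook's SparkSession; `source_path` is the path to the
    Parquet file (not specified in the question, so passed in by the caller).
    """
    df = spark.read.parquet(source_path)
    # Saving in Delta format with saveAsTable registers a managed table,
    # which is what makes it appear as a table in Lakehouse explorer.
    df.write.format("delta").saveAsTable(table_name)
    return df


# In a Fabric notebook this would be called roughly as:
#   load_parquet_as_table(spark, "<path to sales.parquet in storage1>")
```

Writing the same data as plain Parquet files would place it in the Files area instead, so it would not show up automatically as a table.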

Reference:

https://learn.microsoft.com/en-us/fabric/data-engineering/lakehouse-notebook-load-data

You have a Fabric tenant that contains a lakehouse named Lakehouse1. Lakehouse1 contains a Delta table with eight columns.

You receive new data that contains the same eight columns and two additional columns.

You create a Spark DataFrame and assign the DataFrame to a variable named df. The DataFrame contains the new data.

You need to add the new data to the Delta table to meet the following requirements:

- Keep all the existing rows.

- Ensure that all the new data is added to the table.

How should you complete the code?

Check the answer area

Answer is Append, mergeSchema, true

1. Append

--> Append mode keeps all the existing rows and adds the new rows to the table.

2. mergeSchema, true

--> The new data contains two additional columns. mergeSchema adds those columns to the table schema during the write, so none of the new data is dropped. overwriteSchema, true would instead replace the existing schema with the schema of the new DataFrame, which applies when you overwrite a table rather than append to it.
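The answer can be sketched as a small PySpark helper. This is an illustration under assumptions: the function name and the `table_name` parameter are hypothetical, because the question does not name the Delta table, and `df` is the DataFrame described in the question.

```python
def append_with_new_columns(df, table_name):
    """Append df to an existing Delta table, letting Delta add new columns.

    `table_name` is a placeholder; the question does not name the table.
    """
    (
        df.write
        .format("delta")
        .mode("append")                 # keep all the existing rows
        .option("mergeSchema", "true")  # add the two new columns to the schema
        .saveAsTable(table_name)
    )


# In a Fabric notebook this would be called roughly as:
#   append_with_new_columns(df, "<name of the Delta table>")
```

After the write, rows that existed before the append hold NULL in the two new columns.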

Reference:

https://learn.microsoft.com/en-us/azure/databricks/delta/update-schema

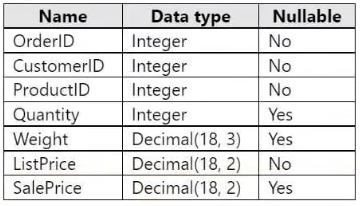

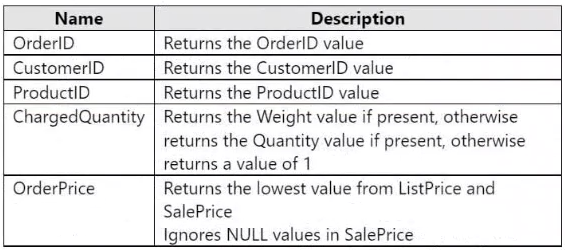

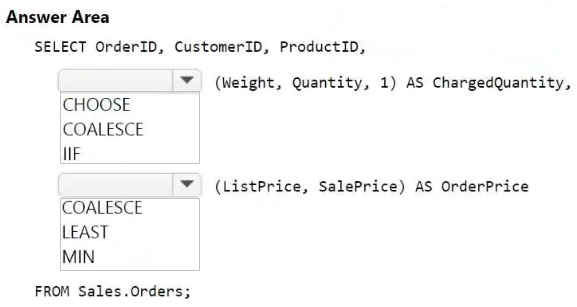

You have a Fabric warehouse that contains a table named Sales.Orders. Sales.Orders contains the following columns.

You need to write a T-SQL query that will return the following columns.

How should you complete the code?

Check the answer area

Answer is Coalesce, Least

COALESCE returns the first argument that isn't NULL.

LEAST returns the smallest argument. If one or more arguments aren't NULL, NULL arguments are ignored during the comparison. If all arguments are NULL, LEAST returns NULL.
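The NULL handling described above can be modeled in plain Python, with `None` standing in for NULL. These two helper functions are illustrative only and are not T-SQL; they just mirror the documented behavior of COALESCE and LEAST.

```python
def coalesce(*args):
    """Return the first argument that is not None, or None if all are None."""
    for value in args:
        if value is not None:
            return value
    return None


def least(*args):
    """Return the smallest non-None argument, or None if all are None."""
    non_null = [value for value in args if value is not None]
    return min(non_null) if non_null else None


print(coalesce(None, "fallback"))  # first non-NULL value
print(least(None, 5, 2))           # NULL is ignored in the comparison
print(least(None, None))           # all NULL gives NULL
```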

Reference:

https://learn.microsoft.com/de-de/sql/t-sql/language-elements/coalesce-transact-sql?view=sql-server-ver16

https://learn.microsoft.com/de-de/sql/t-sql/functions/logical-functions-least-transact-sql?view=sql-server-ver16

You have a Fabric tenant that contains a Microsoft Power BI report named Report1. Report1 includes a Python visual.

Data displayed by the visual is grouped automatically and duplicate rows are NOT displayed.

You need all rows to appear in the visual.

What should you do?

Reference the columns in the Python code by index.

Modify the Sort Column By property for all columns.

Add a unique field to each row.

Modify the Summarize By property for all columns.

Answer is Add a unique field to each row.

By adding a unique field to each row, you ensure that Power BI treats each row as distinct. This can be achieved by incorporating a column that contains unique values for each row (e.g., a row number or a unique identifier). When this unique field is included in the dataset used by the Python visual, Power BI will not aggregate the rows because it recognizes each one as different due to the unique identifier.
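A toy illustration in plain Python of why a unique field prevents rows from being collapsed. It only models the de-duplication effect and is not how Power BI is implemented; the sample rows are made up.

```python
def distinct(rows):
    """Drop duplicate rows while keeping first-seen order."""
    return list(dict.fromkeys(rows))


# Made-up sample data: the first two rows are identical.
rows = [("A", 10), ("A", 10), ("B", 5)]

# Without a unique field, the identical rows collapse into one.
without_id = distinct(rows)

# With a row number added, every row is different, so nothing is removed.
rows_with_id = [(i, *row) for i, row in enumerate(rows, start=1)]
with_id = distinct(rows_with_id)

print(len(without_id), len(with_id))
```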

Reference:

https://learn.microsoft.com/en-us/power-bi/connect-data/desktop-python-visuals

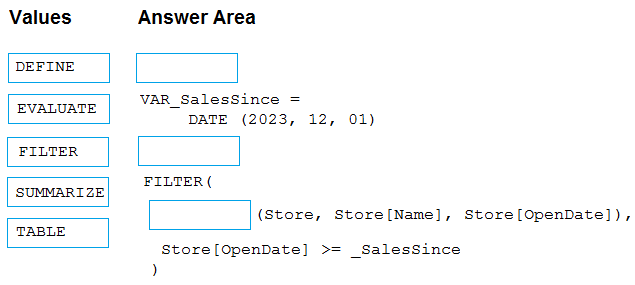

You have a Fabric tenant that contains a semantic model. The model contains data about retail stores.

You need to write a DAX query that will be executed by using the XMLA endpoint. The query must return a table of stores that have opened since December 1, 2023.

How should you complete the DAX expression?

Check the answer section

Answer is DEFINE - EVALUATE - SUMMARIZE

You are creating a semantic model in Microsoft Power BI Desktop. You plan to make bulk changes to the model by using the Tabular Model Definition Language (TMDL) extension for Microsoft Visual Studio Code. You need to save the semantic model to a file. Which file format should you use?

PBIP

PBIX

PBIT

PBIDS

Answer is PBIP

Saving as a Power BI project (PBIP) creates one .pbip file and two folders. The dataset folder (PBIP.Dataset) contains a definition folder that hosts the .tmdl files.

Reference:

https://learn.microsoft.com/en-us/power-bi/developer/projects/projects-overview

https://powerbiblogscdn.azureedge.net/wp-content/uploads/2024/02/tmdlPreviewFeature.png

You have a Fabric workspace that contains a DirectQuery semantic model. The model queries a data source that has 500 million rows.

You have a Microsoft Power BI report named Report1 that uses the model. Report1 contains visuals on multiple pages.

You need to reduce the query execution time for the visuals on all the pages.

What are two features that you can use?

user-defined aggregations

automatic aggregation

query caching

OneLake integration

Answers are:

A. user-defined aggregations

B. automatic aggregation

A - User-defined aggregations let Power BI answer queries from pre-aggregated tables instead of performing a full scan of the underlying data.

B - Automatic aggregations create and maintain aggregations on large datasets automatically, which reduces the number of rows that must be processed for each query.

Incorrect in the context of this question:

C - Although query caching helps improve performance on large datasets, it doesn't support DirectQuery. It is also a Power BI service feature that works automatically and needs no intervention from the user.

Reference:

https://learn.microsoft.com/en-us/power-bi/transform-model/aggregations-advanced

https://learn.microsoft.com/en-us/power-bi/enterprise/aggregations-auto

https://learn.microsoft.com/en-us/power-bi/connect-data/power-bi-query-caching

You have a Fabric tenant that uses a Microsoft Power BI Premium capacity.

You need to enable scale-out for a semantic model.

What should you do first?

At the semantic model level, set Large dataset storage format to Off.

At the tenant level, set Create and use Metrics to Enabled.

At the semantic model level, set Large dataset storage format to On.

At the tenant level, set Data Activator to Enabled.

Answer is At the semantic model level, set Large dataset storage format to On.

Reference:

https://learn.microsoft.com/en-us/power-bi/enterprise/service-premium-scale-out-configure