DP-600: Implementing Analytics Solutions Using Microsoft Fabric

You need to create a data loading pattern for a Type 1 slowly changing dimension (SCD).

Which two actions should you include in the process?

A. Update rows when the non-key attributes have changed.

B. Insert new rows when the natural key exists in the dimension table, and the non-key attribute values have changed.

C. Update the effective end date of rows when the non-key attribute values have changed.

D. Insert new records when the natural key is a new value in the table.

Answers are:

A. Update rows when the non-key attributes have changed.

D. Insert new records when the natural key is a new value in the table.

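A and D describe an SCD Type 1 load; B and C describe SCD Type 2.

A Type 1 SCD does not preserve history: changed attribute values overwrite the existing row, so rows have no effective end dates and no new row is inserted for an existing key.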
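A minimal Python sketch of the Type 1 pattern (A and D), assuming the dimension is held in memory as a dict keyed by natural key. `scd1_merge` and the row layout are hypothetical illustrations, not a Fabric or Synapse API.

```python
from typing import Dict, List, Tuple

Row = Dict[str, object]


def scd1_merge(dimension: Dict[object, Row], source: List[Row], key: str) -> Tuple[int, int]:
    """Type 1 load: insert new natural keys, overwrite changed attributes, keep no history."""
    inserted = updated = 0
    for src in source:
        natural_key = src[key]
        current = dimension.get(natural_key)
        if current is None:
            # New natural key: insert a new record (answer D).
            dimension[natural_key] = dict(src)
            inserted += 1
        elif any(current.get(col) != val for col, val in src.items() if col != key):
            # Existing key with changed non-key attributes: update in place (answer A).
            # No extra row and no effective end date are written, so old values are lost.
            current.update(src)
            updated += 1
    return inserted, updated


dim = {"C1": {"CustomerID": "C1", "City": "Oslo"}}
changes = [
    {"CustomerID": "C1", "City": "Bergen"},  # changed attribute -> update
    {"CustomerID": "C2", "City": "Paris"},   # new natural key -> insert
]
print(scd1_merge(dim, changes, "CustomerID"))  # (1, 1)
print(dim["C1"])  # {'CustomerID': 'C1', 'City': 'Bergen'}
```

A Type 2 load would instead close the old row with an end date and insert a new version (B and C), which is why those actions do not belong here.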

Reference:

https://learn.microsoft.com/en-us/training/modules/populate-slowly-changing-dimensions-azure-synapse-analytics-pipelines/3-choose-between-dimension-types

You have an Azure Repos repository named Repo1 and a Fabric-enabled Microsoft Power BI Premium capacity. The capacity contains two workspaces named Workspace1 and Workspace2. Git integration is enabled at the workspace level.

You plan to use Microsoft Power BI Desktop and Workspace1 to make version-controlled changes to a semantic model stored in Repo1. The changes will be built and deployed to Workspace2 by using Azure Pipelines.

You need to ensure that report and semantic model definitions are saved as individual text files in a folder hierarchy. The solution must minimize development and maintenance effort.

In which file format should you save the changes?

PBIP

PBIDS

PBIT

PBIX

Answer is PBIP

PBIP (Power BI Project):

-> PBIP format is designed to work with version control systems like Azure Repos. It breaks down Power BI artifacts into individual files that can be managed and versioned separately, facilitating better collaboration and change tracking.

-> Folder Hierarchy: It saves the project structure in a folder hierarchy, where each component of the Power BI project (like datasets, reports, data sources) is stored in separate files.

-> Text-Based: Being a text-based format, it integrates well with Git repositories and supports diff and merge operations.

Reference:

https://learn.microsoft.com/en-us/power-bi/developer/projects/projects-overview

You have a Fabric workspace named Workspace1 that contains a lakehouse named Lakehouse1.

In Workspace1, you create a data pipeline named Pipeline1.

You have CSV files stored in an Azure Storage account.

You need to add an activity to Pipeline1 that will copy data from the CSV files to Lakehouse1. The activity must support Power Query M formula language expressions.

Which type of activity should you add?

Dataflow

Notebook

Script

Copy data

Answer is Dataflow

Power Query M support: In a Fabric data pipeline, the Dataflow activity runs a Dataflow Gen2, which is built on Power Query, so its transformations are written in the Power Query M formula language. The Copy data activity does not support M expressions.

Transformations: Dataflows provide a wide range of data transformation capabilities, which are especially useful for cleansing, aggregating, or reshaping CSV data before loading it into the destination.

You are the administrator of a Fabric workspace that contains a lakehouse named Lakehouse1. Lakehouse1 contains the following tables:

Table1: A Delta table created by using a shortcut

Table2: An external table created by using Spark

Table3: A managed table

You plan to connect to Lakehouse1 by using its SQL endpoint.

What will you be able to do after connecting to Lakehouse1?

A. Read Table3.

B. Update the data in Table3.

C. Read Table2.

D. Update the data in Table1.

Answer is Read Table3.

A is correct: managed tables can be read through the SQL analytics endpoint.

B and D are incorrect because the SQL analytics endpoint is read-only, so you can’t update lakehouse tables through it. To modify the data, use Spark or dataflows.

C is incorrect because an external table created by using Spark is visible in the lakehouse but not through the SQL analytics endpoint, so it cannot be read there.

Reference:

https://learn.microsoft.com/en-us/fabric/data-engineering/lakehouse-sql-analytics-endpoint

You have a Fabric tenant that contains a warehouse.

You use a dataflow to load a new dataset from OneLake to the warehouse.

You need to add a Power Query step to identify the maximum values for the numeric columns.

Which function should you include in the step?

Table.MaxN

Table.Max

Table.Range

Table.Profile

Answer is Table.Profile

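We should use Table.Profile to identify the maximum values for the numeric columns, because only Table.Profile returns the maximum value of each column.

Table.Max returns the largest row in the table, based on a comparison criterion, rather than a maximum per column. Table.MaxN returns the largest N rows, and Table.Range returns a range of rows starting at an offset.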
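A rough Python analog (not Power Query M) of the difference between a per-column profile and a largest-row lookup. A table is assumed to be a list of dicts, and `profile_max` and `max_row` are hypothetical helpers. Table.Profile also returns other statistics (Min, Average, Count, and so on); only its Max column is mimicked here, restricted to numeric values as the question requires.

```python
from typing import Dict, List, Optional

Row = Dict[str, object]


def is_number(value: object) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def profile_max(table: List[Row]) -> List[Dict[str, object]]:
    """Like the Max column of Table.Profile: one maximum per numeric column."""
    columns = list(table[0]) if table else []
    result = []
    for col in columns:
        values = [row[col] for row in table if is_number(row[col])]
        result.append({"Column": col, "Max": max(values) if values else None})
    return result


def max_row(table: List[Row], column: str) -> Optional[Row]:
    """Like Table.Max(table, column): the single whole row with the largest value in column."""
    return max(table, key=lambda row: row[column]) if table else None


trips = [{"Fare": 10.0, "Miles": 5}, {"Fare": 7.5, "Miles": 9}]
print(profile_max(trips))      # [{'Column': 'Fare', 'Max': 10.0}, {'Column': 'Miles', 'Max': 9}]
print(max_row(trips, "Fare"))  # {'Fare': 10.0, 'Miles': 5}
```

The row with the largest Fare has Miles = 5, not the column maximum of 9, which is why a largest-row function does not answer a per-column question.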

Reference:

Table.Profile - https://learn.microsoft.com/en-us/powerquery-m/table-profile

Table.Max - https://learn.microsoft.com/en-us/powerquery-m/table-max

You have a Fabric workspace named Workspace1 that contains a dataflow named Dataflow1. Dataflow1 has a query that returns 2,000 rows.

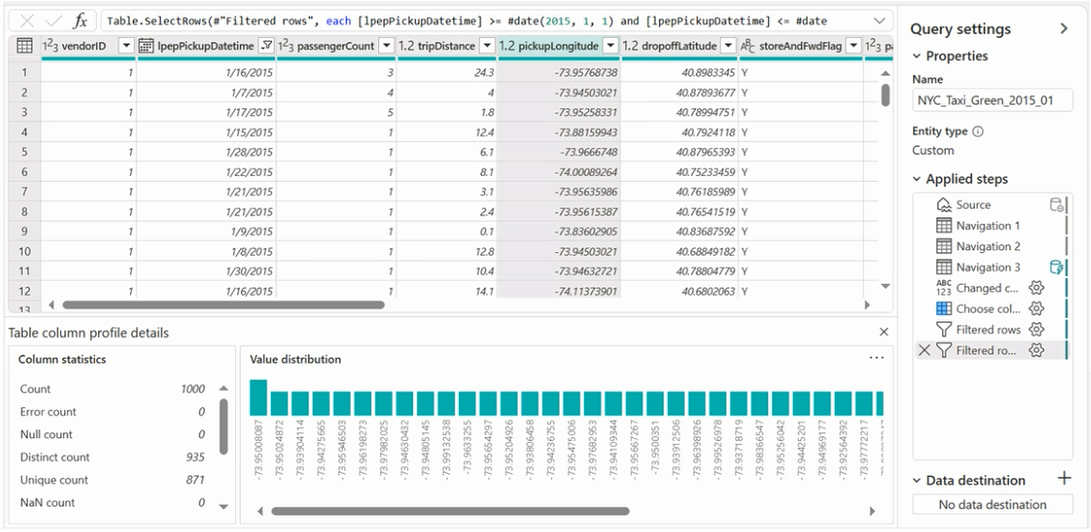

You view the query in Power Query as shown in the following exhibit.

What can you identify about the pickupLongitude column?

A. The column has duplicate values.

B. All the table rows are profiled.

C. The column has missing values.

D. There are 935 values that occur only once.

Answer is The column has duplicate values.

The column statistics show a count of 1,000, which is the default profiling limit (column profiling is based on the top 1,000 rows), so we cannot tell whether all the data was read. However, the distinct count is lower than 1,000, so the column has duplicate values.

B: Not all rows are profiled; only 1,000 of the 2,000 rows are in the sample.

C: The column statistics show no missing values in the sample.

D: The number of values that occur only once is 871 (the unique count), not 935.
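A simplified Python sketch of how the count, distinct, and unique statistics relate over a top-N sample. `column_stats` and the longitude data are hypothetical, and excluding nulls from the distinct and unique counts is an assumption that may not match Power Query's exact rules.

```python
from collections import Counter
from typing import Dict, List, Optional


def column_stats(values: List[Optional[float]], sample_size: int = 1000) -> Dict[str, int]:
    """Approximate Power Query column statistics over the top sample_size rows."""
    sample = values[:sample_size]  # profiling only sees the first rows
    counts = Counter(v for v in sample if v is not None)
    return {
        "count": len(sample),
        "empty": sum(1 for v in sample if v is None),
        "distinct": len(counts),  # number of different values
        "unique": sum(1 for n in counts.values() if n == 1),  # values seen exactly once
    }


longitudes = [-73.98, -73.97, -73.97, -73.95] + [-73.90] * 1996  # 2,000 hypothetical rows
stats = column_stats(longitudes)
print(stats)  # {'count': 1000, 'empty': 0, 'distinct': 4, 'unique': 2}
print(stats["distinct"] < stats["count"] - stats["empty"])  # True -> duplicates exist
```

Only 1,000 of the 2,000 rows are counted, and a distinct count below the non-empty count is the same signal the exhibit gives for pickupLongitude.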

You have a Fabric tenant named Tenant1 that contains a workspace named WS1. WS1 uses a capacity named C1 and contains a dataset named DS1.

You need to ensure read-write access to DS1 is available by using the XMLA endpoint.

What should be modified first?

the DS1 settings

the WS1 settings

the C1 settings

the Tenant1 settings

Answer is the C1 settings

Because the XMLA endpoint is set to Read Only, you must first go to the capacity (C1) settings and enable Read Write.

Reference:

https://learn.microsoft.com/en-us/power-bi/enterprise/service-premium-connect-tools#enable-xmla-read-write

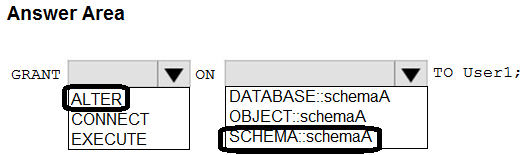

You have a Fabric tenant that contains a warehouse named Warehouse1. Warehouse1 contains three schemas named schemaA, schemaB, and schemaC.

You need to ensure that a user named User1 can truncate tables in schemaA only.

How should you complete the T-SQL statement?

Check the answer section

The statement grants User1 the ALTER permission on schemaA. ALTER on a schema applies to the tables in that schema, and TRUNCATE TABLE requires ALTER permission on the table, so User1 can truncate tables in schemaA without receiving any permission on schemaB, schemaC, or other objects.

Reference:

https://learn.microsoft.com/en-us/sql/t-sql/statements/grant-schema-permissions-transact-sql?view=sql-server-ver16

You have a Fabric tenant that contains 30 CSV files in OneLake. The files are updated daily.

You create a Microsoft Power BI semantic model named Model1 that uses the CSV files as a data source. You configure incremental refresh for Model1 and publish the model to a Premium capacity in the Fabric tenant.

When you initiate a refresh of Model1, the refresh fails after running out of resources.

What is a possible cause of the failure?

A. Query folding is occurring.

B. Only refresh complete days is selected.

C. XMLA Endpoint is set to Read Only.

D. Query folding is NOT occurring.

E. The data type of the column used to partition the data has changed.

Answer is E. The data type of the column used to partition the data has changed.

Query folding does not apply to CSV file sources, which rules out A and D.

The referenced Microsoft article ("Problem: Loading data takes too long") lists two causes: one related to query folding (already ruled out) and one related to the data type of the column used for partitioning, which leads to answer E.

Reference:

https://learn.microsoft.com/en-us/power-bi/connect-data/incremental-refresh-troubleshoot#problem-loading-data-takes-too-long

You have a Fabric tenant that contains a complex semantic model. The model is based on a star schema and contains many tables, including a fact table named Sales.

You need to create a diagram of the model. The diagram must contain only the Sales table and related tables.

What should you use from Microsoft Power BI Desktop?

data categories

Data view

Model view

DAX query view

Answer is Model view

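Model view lets you analyze the semantic model and create new layouts (diagrams). You can add a layout containing the Sales table and its related tables, leaving the rest of the model out of that diagram.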