DP-600: Implementing Analytics Solutions Using Microsoft Fabric

You have a Fabric tenant that contains a semantic model named Model1. Model1 uses Import mode. Model1 contains a table named Orders. Orders has 100 million rows and the following fields.

You need to reduce the memory used by Model1 and the time it takes to refresh the model.

Which two actions should you perform?

Split OrderDateTime into separate date and time columns.

Replace TotalQuantity with a calculated column.

Convert Quantity into the Text data type.

Replace TotalSalesAmount with a measure.

Answers are:

A. Split OrderDateTime into separate date and time columns.

D. Replace TotalSalesAmount with a measure.

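A: Splitting OrderDateTime into separate date and time columns lowers the cardinality of each column, so the data compresses better in Import mode.

D: A measure is evaluated at query time and is not stored in the model, so replacing the TotalSalesAmount column with a measure reduces both memory use and refresh time.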
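The cardinality argument for answer A can be checked with a small sketch in plain Python. The timestamps below are hypothetical (one order every 37 seconds), and the function name is illustrative; the point is only that the split columns have far fewer distinct values than the combined column.

```python
from datetime import datetime, timedelta

def cardinalities(start, count, step):
    """Return distinct-value counts for the combined datetime column
    and for the separate date and time columns."""
    stamps = [start + step * i for i in range(count)]
    combined = len(set(stamps))
    dates = len({s.date() for s in stamps})
    times = len({s.time() for s in stamps})
    return combined, dates, times

# Hypothetical data: 100,000 orders, one every 37 seconds
combined, dates, times = cardinalities(
    datetime(2024, 1, 1), 100_000, timedelta(seconds=37)
)
print(combined, dates, times)
```

At one-second granularity a time column can never exceed 86,400 distinct values, while a combined datetime column keeps growing with the number of rows.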

You have a Fabric tenant that contains a lakehouse named Lakehouse1. Lakehouse1 contains a table named Table1. You are creating a new data pipeline. You plan to copy external data to Table1. The schema of the external data changes regularly.

You need the copy operation to meet the following requirements:

Replace Table1 with the schema of the external data.

Replace all the data in Table1 with the rows in the external data.

You add a Copy data activity to the pipeline.

What should you do for the Copy data activity?

From the Source tab, add additional columns.

From the Destination tab, set Table action to Overwrite.

From the Settings tab, select Enable staging.

From the Source tab, select Enable partition discovery.

From the Source tab, select Recursively.

Answer is From the Destination tab, set Table action to Overwrite.

Setting Table action to Overwrite replaces the existing data and schema of the table with the data and schema of the incoming rows.

Reference:

https://learn.microsoft.com/en-us/fabric/data-factory/copy-data-activity#configure-your-source-under-the-source-tab
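For comparison only, the same "replace rows and schema" behavior can be sketched in a notebook with the Delta writer. This is not the Copy data activity itself; it is a rough PySpark analogue, and the function name is hypothetical. It assumes `df` is a Spark DataFrame holding the external data.

```python
def overwrite_table_and_schema(df, table_name):
    """Replace both the rows and the schema of a Delta table with those of df."""
    (
        df.write.format("delta")
        .mode("overwrite")                  # replace all existing rows
        .option("overwriteSchema", "true")  # allow the table schema to be replaced too
        .saveAsTable(table_name)
    )
```

In a notebook attached to the lakehouse, this would be called as `overwrite_table_and_schema(df, "Table1")`.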

You have a Fabric tenant that contains a lakehouse named Lakehouse1. Lakehouse1 contains a subfolder named Subfolder1 that contains CSV files.

You need to convert the CSV files into the delta format that has V-Order optimization enabled.

What should you do from Lakehouse explorer?

Use the Load to Tables feature.

Create a new shortcut in the Files section.

Create a new shortcut in the Tables section.

Use the Optimize feature.

Answer is Use the Load to Tables feature.

With Load to Tables, tables are always loaded using the Delta Lake table format with V-Order optimization enabled.

Reference:

https://learn.microsoft.com/en-us/fabric/data-engineering/load-to-tables#load-to-table-capabilities-overview

You have a Fabric tenant that contains a lakehouse named Lakehouse1. Lakehouse1 contains an unpartitioned table named Table1.

You plan to copy data to Table1 and partition the table based on a date column in the source data.

You create a Copy activity to copy the data to Table1.

You need to specify the partition column in the Destination settings of the Copy activity.

What should you do first?

From the Destination tab, set Mode to Append.

From the Destination tab, select the partition column.

From the Source tab, select Enable partition discovery.

From the Destination tab, set Mode to Overwrite.

Answer is From the Destination tab, set Mode to Overwrite.

Expand Advanced. In Table action, select Overwrite, and then select Enable partition. Under Partition columns, select Add column and choose the column you want to use as the partition column. You can use a single column or multiple columns as the partition column.

Reference:

https://learn.microsoft.com/en-us/fabric/data-factory/tutorial-lakehouse-partition#load-data-to-lakehouse-using-partition-columns

You have a Fabric tenant that contains a lakehouse.

You are using a Fabric notebook to save a large DataFrame by using the following code:

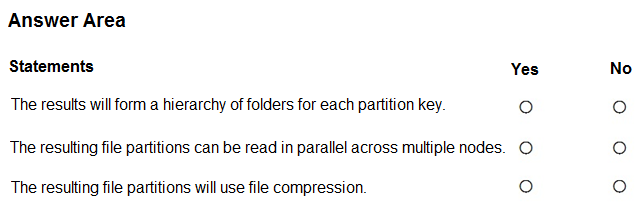

df.write.partitionBy("year", "month", "day").mode("overwrite").parquet("Files/SalesOrder")

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Check the answer section

Answer is Y-Y-Y

Parquet files are compressed by default, so no additional action is needed to enable compression. If needed, you can specify a different compression codec when writing Parquet files to further optimize storage and performance.
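The effect of `partitionBy` on the folder layout can be sketched without Spark. Spark writes Hive-style partition directories named `column=value`, nested in the order the columns are listed. The helper name and the date values below are hypothetical.

```python
def partition_path(base, **partition_values):
    """Build the Hive-style directory that holds the files for one partition."""
    parts = [f"{col}={val}" for col, val in partition_values.items()]
    return "/".join([base, *parts])

print(partition_path("Files/SalesOrder", year=2024, month=1, day=15))
# Files/SalesOrder/year=2024/month=1/day=15
```

Queries that filter on year, month, or day can then skip the directories that do not match.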

You have a Fabric tenant that contains a data pipeline.

You need to ensure that the pipeline runs every four hours on Mondays and Fridays.

To what should you set Repeat for the schedule?

Daily

By the minute

Weekly

Hourly

Answer is Weekly

The only way to do this is to set Repeat to Weekly, select Monday and Friday as the days, and manually add six times at four-hour intervals.
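The six times can be listed with a short sketch. Starting at midnight is an assumption; the question only requires four-hour spacing, and the helper name is illustrative.

```python
from datetime import time

def times_every(hours, start_hour=0):
    """Return the times of day that fall every `hours` hours from start_hour."""
    return [time(hour=h) for h in range(start_hour, 24, hours)]

slots = times_every(4)
print([t.strftime("%H:%M") for t in slots])
# ['00:00', '04:00', '08:00', '12:00', '16:00', '20:00']
```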

You have a Fabric workspace that uses the default Spark starter pool and runtime version 1.2.

You plan to read a CSV file named Sales_raw.csv in a lakehouse, select columns, and save the data as a Delta table to the managed area of the lakehouse. Sales_raw.csv contains 12 columns. You have the following code.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Check the answer section

Answer is N-N-Y

1. No. The source is a CSV file, so Spark reads the entire file and then selects the columns. Unlike Parquet, CSV is not a columnar format, so unneeded columns cannot be skipped at read time.

2. No. Creating new files adds only negligible overhead (perhaps 0.5% slower).

3. Yes. Setting inferSchema to True increases execution time because the engine passes over the data twice: once to infer the schema and once to read the data.
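The inferSchema point can be illustrated with a minimal sketch, assuming `spark` is the notebook's SparkSession. The function name is hypothetical, and any schema passed in would need to describe all 12 columns of the file.

```python
def read_csv(spark, path, schema=None):
    """Read a CSV file with a header row; infer the schema only when none is supplied."""
    if schema is None:
        # Inference needs an extra pass over the data to work out column types.
        return spark.read.csv(path, header=True, inferSchema=True)
    # An explicit schema (StructType or DDL string) avoids the inference pass.
    return spark.read.csv(path, header=True, schema=schema)
```

Supplying the schema up front trades a little typing for one fewer scan of the file.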

You are creating a data flow in Fabric to ingest data from an Azure SQL database by using a T-SQL statement.

You need to ensure that any foldable Power Query transformation steps are processed by the Microsoft SQL Server engine.

How should you complete the code?

Check the answer section

Value.NativeQuery(Source, "SELECT DepartmentID, Name FROM HumanResources.Department WHERE GroupName = 'Research and Development' ", null, [EnableFolding = true])

Reference:

https://learn.microsoft.com/en-us/power-query/native-query-folding#use-valuenativequery-function

You have a Fabric tenant that contains a lakehouse named Lakehouse1.

Readings from 100 IoT devices are appended to a Delta table in Lakehouse1. Each set of readings is approximately 25 KB. Approximately 10 GB of data is received daily.

All the table and SparkSession settings are set to the default.

You discover that queries are slow to execute. In addition, the lakehouse storage contains data and log files that are no longer used.

You need to remove the files that are no longer used and combine small files into larger files with a target size of 1 GB per file.

What should you do?

Check the answer section

Answers are:

VACUUM: to remove old files no longer referenced.

OPTIMIZE: to create fewer files with a larger size.

Reference:

https://learn.microsoft.com/en-us/fabric/data-engineering/delta-optimization-and-v-order?tabs=sparksql

https://docs.delta.io/latest/delta-utility.html#-delta-vacuum

https://docs.delta.io/latest/optimizations-oss.html
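A rough sketch of the scale of the problem and of the two maintenance commands follows. It assumes each 25 KB set of readings lands as its own file and uses decimal units; the table name `iot_readings` and the helper names are hypothetical, and `spark` would be the notebook's SparkSession. The 168-hour retention matches Delta's default of seven days.

```python
def small_files_per_day(daily_bytes, reading_bytes):
    """Estimate how many small files accumulate per day."""
    return daily_bytes // reading_bytes

def maintenance_statements(table_name, retain_hours=168):
    """Spark SQL statements: compact small files, then remove unreferenced ones."""
    return [
        f"OPTIMIZE {table_name}",
        f"VACUUM {table_name} RETAIN {retain_hours} HOURS",
    ]

def run_maintenance(spark, table_name):
    """Run the maintenance statements against a Delta table."""
    for statement in maintenance_statements(table_name):
        spark.sql(statement)

print(small_files_per_day(10 * 1000**3, 25 * 1000))  # 400000
print(maintenance_statements("iot_readings"))
```

Running OPTIMIZE first means the small files it replaces become unreferenced, so a later VACUUM can remove them once the retention period has passed.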

You have a Fabric tenant that contains a lakehouse named Lakehouse1. Lakehouse1 contains a table named Nyctaxi_raw. Nyctaxi_raw contains the following table:

You create a Fabric notebook and attach it to Lakehouse1.

You need to use PySpark code to transform the data. The solution must meet the following requirements:

Add a column named pickupDate that will contain only the date portion of pickupDateTime.

Filter the DataFrame to include only rows where fareAmount is a positive number that is less than 100.

How should you complete the code?

Check the answer section

Answer is:

from pyspark.sql.types import DateType

df = df.withColumn('pickupDate', df['pickupDateTime'].cast(DateType())) \
    .filter("fareAmount > 0 AND fareAmount < 100")

There is a typo in the Date section.