DP-100: Designing and Implementing a Data Science Solution on Azure

You plan to use a Data Science Virtual Machine (DSVM) with the open source deep learning frameworks Caffe2 and PyTorch.

You need to select a pre-configured DSVM to support the frameworks.

What should you create?

Data Science Virtual Machine for Windows 2012

Data Science Virtual Machine for Linux (CentOS)

Geo AI Data Science Virtual Machine with ArcGIS

Data Science Virtual Machine for Windows 2016

Data Science Virtual Machine for Linux (Ubuntu)

Answer is Data Science Virtual Machine for Linux (Ubuntu).

Caffe2 and PyTorch are supported by the Data Science Virtual Machine for Linux. Microsoft offers Linux editions of the DSVM on Ubuntu 16.04 LTS and CentOS 7.4. Only the DSVM on Ubuntu is preconfigured for Caffe2 and PyTorch.

References:

https://docs.microsoft.com/en-us/azure/machine-learning/data-science-virtual-machine/overview

You plan to create a speech recognition deep learning model.

The model must support the latest version of Python.

You need to recommend a deep learning framework for speech recognition to include in the Data Science Virtual Machine (DSVM).

What should you recommend?

Rattle

TensorFlow

Weka

Deeplearning4j

Answer is TensorFlow

TensorFlow is an open source library for numerical computation and large-scale machine learning. It uses Python to provide a convenient front-end API for building applications with the framework.

TensorFlow can train and run deep neural networks for handwritten digit classification, image recognition, word embeddings, recurrent neural networks, sequence-to-sequence models for machine translation, natural language processing, and PDE (partial differential equation) based simulations.

Incorrect Answers:

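Rattle is an R-based graphical tool that gets you started with data analytics and machine learning.

Weka is a Java-based suite of visual data mining and machine learning software.

Deeplearning4j is a deep learning library written for the Java Virtual Machine (Java and Scala) rather than Python.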

References:

https://www.infoworld.com/article/3278008/what-is-tensorflow-the-machine-learning-library-explained.html

You are developing a hands-on workshop to introduce Docker for Windows to attendees.

You need to ensure that workshop attendees can install Docker on their devices.

Which two prerequisite components should attendees install on the devices? Each correct answer presents part of the solution.

Microsoft Hardware-Assisted Virtualization Detection Tool

Kitematic

BIOS-enabled virtualization

VirtualBox

Windows 10 64-bit Professional

Answers are BIOS-enabled virtualization and Windows 10 64-bit Professional

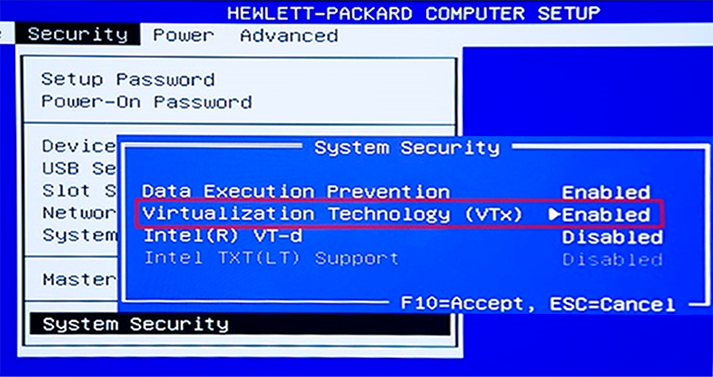

C (BIOS-enabled virtualization): Make sure your Windows system supports Hardware Virtualization Technology and that virtualization is enabled.

Ensure that hardware virtualization support is turned on in the BIOS settings.

E (Windows 10 64-bit Professional): To run Docker Toolbox, your machine must have a 64-bit operating system running Windows 7 or higher; Docker for Windows requires 64-bit Windows 10 Pro, Enterprise, or Education.

References:

https://docs.docker.com/toolbox/toolbox_install_windows/

https://blogs.technet.microsoft.com/canitpro/2015/09/08/step-by-step-enabling-hyper-v-for-use-on-windows-10/

Your team is building a data engineering and data science development environment.

The environment must support the following requirements:

- support Python and Scala

- compose data storage, movement, and processing services into automated data pipelines

- the same tool should be used for the orchestration of both data engineering and data science

- support workload isolation and interactive workloads

- enable scaling across a cluster of machines

You need to create the environment.

What should you do?

Build the environment in Apache Hive for HDInsight and use Azure Data Factory for orchestration.

Build the environment in Azure Databricks and use Azure Data Factory for orchestration.

Build the environment in Apache Spark for HDInsight and use Azure Container Instances for orchestration.

Build the environment in Azure Databricks and use Azure Container Instances for orchestration.

Answer is Build the environment in Azure Databricks and use Azure Data Factory for orchestration.

In Azure Databricks, you can create two different types of clusters:

- Standard: the default cluster type, which can be used with Python, R, Scala, and SQL.

- High Concurrency: designed to be shared by multiple concurrent users, with isolation between their workloads.

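Azure Databricks is fully integrated with Azure Data Factory: a Data Factory pipeline can run Databricks notebooks, JARs, and Python files as activities, so the same tool orchestrates both the data engineering and the data science steps.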
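As an illustration of that integration, a Data Factory pipeline that runs a Databricks notebook is defined in JSON with a `DatabricksNotebook` activity. The Python sketch below only builds that JSON definition; the pipeline, linked service, and notebook names are hypothetical placeholders, and deploying the definition (through the portal, ARM templates, or an SDK) is not shown.

```python
import json
from typing import Dict, Optional


def databricks_notebook_pipeline(pipeline_name: str,
                                 linked_service: str,
                                 notebook_path: str,
                                 parameters: Optional[Dict[str, str]] = None) -> dict:
    """Build a minimal Data Factory pipeline definition with one notebook activity."""
    activity = {
        "name": "RunDatabricksNotebook",
        "type": "DatabricksNotebook",
        # Refers to an Azure Databricks linked service defined separately in Data Factory.
        "linkedServiceName": {
            "referenceName": linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "notebookPath": notebook_path,
            # Passed to the notebook as widget values.
            "baseParameters": parameters or {},
        },
    }
    return {"name": pipeline_name, "properties": {"activities": [activity]}}


if __name__ == "__main__":
    pipeline = databricks_notebook_pipeline(
        "TrainModelPipeline",           # hypothetical pipeline name
        "AzureDatabricksLinkedService", # hypothetical linked service name
        "/Shared/train_model",          # hypothetical notebook path
        {"input_path": "raw/"},
    )
    print(json.dumps(pipeline, indent=2))
```

Chaining several such activities (for example, a data preparation notebook followed by a training notebook) in one pipeline is what lets Data Factory orchestrate both workloads.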

Incorrect Answer: Build the environment in Azure Databricks and use Azure Container Instances for orchestration. Azure Container Instances is good for development or testing, but it is not suitable for production workloads.

References:

https://docs.microsoft.com/en-us/azure/architecture/data-guide/technology-choices/data-science-and-machine-learning

You are developing deep learning models to analyze semi-structured, unstructured, and structured data types.

You have the following data available for model building:

- Video recordings of sporting events

- Transcripts of radio commentary about events

- Logs from related social media feeds captured during sporting events

You need to select an environment for creating the model.

Which environment should you use?

Azure Cognitive Services

Azure Data Lake Analytics

Azure HDInsight with Spark MLlib

Azure Machine Learning Studio

Answer is Azure Cognitive Services.

Azure Cognitive Services expand on Microsoft’s evolving portfolio of machine learning APIs and enable developers to easily add cognitive features (such as emotion and video detection; facial, speech, and vision recognition; and speech and language understanding) into their applications. The goal of Azure Cognitive Services is to help developers create applications that can see, hear, speak, understand, and even begin to reason. The catalog of services within Azure Cognitive Services can be categorized into five main pillars: Vision, Speech, Language, Search, and Knowledge.

References:

https://docs.microsoft.com/en-us/azure/cognitive-services/welcome

You are moving a large dataset from Azure Machine Learning Studio to a Weka environment.

You need to format the data for the Weka environment.

Which module should you use?

Convert to CSV

Convert to Dataset

Convert to ARFF

Convert to SVMLight

Answer is Convert to ARFF

Use the Convert to ARFF module in Azure Machine Learning Studio to convert datasets and results in Azure Machine Learning to the Attribute-Relation File Format (ARFF) used by the Weka toolset.

The ARFF data specification for Weka supports multiple machine learning tasks, including data preprocessing, classification, and feature selection. In this format, data is organized by entities and their attributes, and is contained in a single text file.
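To make the format concrete, the following Python sketch writes a tiny dataset as ARFF text: a `@RELATION` line, one `@ATTRIBUTE` line per column (`NUMERIC`, or a `{...}` list of nominal values), then comma-separated rows under `@DATA`, with `?` for missing values. It is not the Convert to ARFF module itself; the function name `to_arff`, the example data, and the assumption that names and values contain no spaces or commas (which would require quoting) are illustrative only.

```python
from typing import List, Optional, Sequence, Tuple, Union

# An attribute type is a keyword such as "NUMERIC" or a list of nominal values.
AttrType = Union[str, Sequence[str]]
Value = Optional[Union[int, float, str]]


def to_arff(relation: str,
            attributes: Sequence[Tuple[str, AttrType]],
            rows: Sequence[Sequence[Value]]) -> str:
    """Serialize rows as ARFF text. Assumes names and values need no quoting."""
    lines: List[str] = [f"@RELATION {relation}", ""]
    for name, kind in attributes:
        if not isinstance(kind, str):
            # Nominal attribute: the allowed values go inside braces.
            kind = "{" + ",".join(kind) + "}"
        lines.append(f"@ATTRIBUTE {name} {kind}")
    lines += ["", "@DATA"]
    for row in rows:
        # ARFF uses "?" for a missing value.
        lines.append(",".join("?" if v is None else str(v) for v in row))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical example data.
    print(to_arff("stocks",
                  [("price", "NUMERIC"), ("trend", ["up", "down"])],
                  [[101.5, "up"], [None, "down"]]), end="")
```

Running it prints the header section (`@RELATION stocks`, `@ATTRIBUTE price NUMERIC`, `@ATTRIBUTE trend {up,down}`) followed by `@DATA` and the rows `101.5,up` and `?,down`, all in a single text file as described above.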

References:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/convert-to-arff

You are implementing a machine learning model to predict stock prices.

The model uses a PostgreSQL database and requires GPU processing.

You need to create a virtual machine that is pre-configured with the required tools.

What should you do?

Create a Data Science Virtual Machine (DSVM) Windows edition.

Create a Geo AI Data Science Virtual Machine (Geo-DSVM) Windows edition.

Create a Deep Learning Virtual Machine (DLVM) Linux edition.

Create a Deep Learning Virtual Machine (DLVM) Windows edition.

Create a Data Science Virtual Machine (DSVM) Linux edition.

Answer is Create a Data Science Virtual Machine (DSVM) Linux edition.

Incorrect Answers:

A (DSVM Windows edition) and D (DLVM Windows edition): PostgreSQL (CentOS) is only available in the Linux edition.

B (Geo-DSVM Windows edition): The Azure Geo AI Data Science VM (Geo-DSVM) delivers geospatial analytics capabilities from Microsoft's Data Science VM. Specifically, this VM extends the AI and data science toolkits in the Data Science VM by adding ESRI's market-leading ArcGIS Pro Geographic Information System.

C (DLVM Linux edition) and D: DLVM is a template on top of the DSVM image. The packages, GPU drivers, and so on are all already in the DSVM image; the DLVM exists mostly for convenience at creation time, because it can only be created on GPU VM instances on Azure.

References:

https://docs.microsoft.com/en-us/azure/machine-learning/data-science-virtual-machine/overview

You must store data in Azure Blob Storage to support Azure Machine Learning.

You need to transfer the data into Azure Blob Storage.

What are three possible ways to achieve the goal?

Bulk Insert SQL Query

AzCopy

Python script

Azure Storage Explorer

Bulk Copy Program (BCP)

Answers are AzCopy, Python script and Azure Storage Explorer

You can move data to and from Azure Blob storage using different technologies:

- Azure Storage Explorer

- AzCopy

- Python

- SSIS
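For the Python script option, a minimal sketch using the `azure-storage-blob` SDK might look like the following. It assumes the package is installed (`pip install azure-storage-blob`), that the target container already exists, and that you have a storage account connection string; the helper names `plan_uploads` and `upload_folder` are hypothetical.

```python
from pathlib import Path
from typing import List, Tuple


def plan_uploads(local_dir: str, prefix: str = "") -> List[Tuple[str, str]]:
    """Pair every file under local_dir with a blob name that keeps its relative path."""
    root = Path(local_dir)
    pairs = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # Blob names use "/" as the virtual folder separator on every OS.
            pairs.append((str(path), prefix + path.relative_to(root).as_posix()))
    return pairs


def upload_folder(conn_str: str, container: str, local_dir: str, prefix: str = "") -> int:
    """Upload every file under local_dir to an existing container; return the file count."""
    # Imported here so plan_uploads can be used without the SDK installed.
    from azure.storage.blob import BlobServiceClient

    service = BlobServiceClient.from_connection_string(conn_str)
    container_client = service.get_container_client(container)
    count = 0
    for local_path, blob_name in plan_uploads(local_dir, prefix):
        with open(local_path, "rb") as data:
            container_client.upload_blob(name=blob_name, data=data, overwrite=True)
        count += 1
    return count
```

For example, `upload_folder(conn_str, "training-data", "./data", prefix="raw/")` (container and folder names hypothetical) would copy every file under `./data` into the container under the `raw/` prefix. Keeping the path-to-blob-name mapping in a separate function makes it testable without network access.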

References:

https://docs.microsoft.com/en-us/azure/machine-learning/team-data-science-process/move-azure-blob

You plan to use a Deep Learning Virtual Machine (DLVM) to train deep learning models using Compute Unified Device Architecture (CUDA) computations.

You need to configure the DLVM to support CUDA.

What should you implement?

Solid State Drives (SSD)

Central Processing Unit (CPU) speed increase by using overclocking

Graphics Processing Unit (GPU)

High Random Access Memory (RAM) configuration

Intel Software Guard Extensions (Intel SGX) technology

Answer is Graphics Processing Unit (GPU)

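A Deep Learning Virtual Machine is a pre-configured environment for deep learning using GPU instances. CUDA is NVIDIA's parallel computing platform and programming model for GPUs, so CUDA computations require an NVIDIA GPU; faster storage, more RAM, or a faster CPU do not enable CUDA.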
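On a DLVM provisioned on a GPU VM size, a quick way to confirm that CUDA-capable hardware is visible is NVIDIA's `nvidia-smi` tool, whose `-L` option lists the GPUs. The Python sketch below assumes the NVIDIA driver utilities are installed and on `PATH`; the function name `list_nvidia_gpus` is hypothetical.

```python
import shutil
import subprocess
from typing import List


def list_nvidia_gpus() -> List[str]:
    """Return one line per GPU reported by `nvidia-smi -L`, or [] if none is visible."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        # NVIDIA driver tools are not installed or not on PATH.
        return []
    result = subprocess.run([exe, "-L"], capture_output=True, text=True, check=False)
    if result.returncode != 0:
        # The tool exists but could not communicate with a GPU or driver.
        return []
    return [line for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = list_nvidia_gpus()
    print("\n".join(gpus) if gpus else "No NVIDIA GPU visible: CUDA workloads will not run here.")
```

An empty result on a VM intended for CUDA training usually means a CPU-only VM size was chosen.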

References:

https://azuremarketplace.microsoft.com/en-au/marketplace/apps/microsoft-ads.dsvm-deep-learning

You are planning to host practical training to acquaint staff with Docker for Windows.

Staff devices must support the installation of Docker.

Which of the following are requirements for this installation? Answer by dragging the correct options from the list to the answer area.


The requirements for installing Docker on Windows:

- Windows 10 Pro, Enterprise, or Education (64-bit)

- Hyper-V and Containers Windows features must be enabled.

- The CPU must support Hardware Virtualization.

- At least 4 GB of RAM.

- Windows 10 Anniversary Update (version 1607 or later) or Windows Server 2016.

- A 64-bit processor.

These requirements should be checked before hosting the practical training to ensure that all staff devices meet the necessary specifications.
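One way to run that check is to compare an inventory record for each device against the list above. The Python sketch below assumes a hypothetical `Device` record that you would fill in from your own inventory, and it only models the Windows 10 path (not Windows Server 2016).

```python
from dataclasses import dataclass
from typing import List

# Editions listed in the requirements above.
SUPPORTED_EDITIONS = {"Pro", "Enterprise", "Education"}
# Windows 10 Anniversary Update (version 1607) is OS build 14393.
MIN_BUILD = 14393
MIN_RAM_GB = 4


@dataclass
class Device:
    """Hypothetical inventory record for one staff device."""
    edition: str              # e.g. "Pro", "Enterprise", "Education", "Home"
    is_64bit: bool            # 64-bit Windows on a 64-bit processor
    build: int                # Windows 10 OS build number
    ram_gb: float
    cpu_virtualization: bool  # supported by the CPU and enabled in BIOS
    hyperv_enabled: bool      # Hyper-V Windows feature
    containers_enabled: bool  # Containers Windows feature


def docker_blockers(d: Device) -> List[str]:
    """Return the unmet requirements; an empty list means the device is ready."""
    problems = []
    if d.edition not in SUPPORTED_EDITIONS:
        problems.append("edition must be Windows 10 Pro, Enterprise, or Education")
    if not d.is_64bit:
        problems.append("64-bit Windows and a 64-bit processor are required")
    if d.build < MIN_BUILD:
        problems.append("Windows 10 Anniversary Update (1607) or later is required")
    if d.ram_gb < MIN_RAM_GB:
        problems.append("at least 4 GB of RAM is required")
    if not d.cpu_virtualization:
        problems.append("hardware virtualization must be supported and enabled")
    if not (d.hyperv_enabled and d.containers_enabled):
        problems.append("Hyper-V and Containers features must be enabled")
    return problems
```

Returning a list of reasons, rather than a single yes/no, tells each attendee exactly what to fix before the training.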

References:

https://docs.docker.com/toolbox/toolbox_install_windows/

https://blogs.technet.microsoft.com/canitpro/2015/09/08/step-by-step-enabling-hyper-v-for-use-on-windows-10/

https://docs.docker.com/docker-for-windows/install/